Hello! For our Angel Hackathon submission, we trained a GAN (Generative Adversarial Network) on EDM MIDI files so that it would generate music with similar musical elements, such as note pitches and durations. Our source code can be found on GitHub.

Below are some samples generated by the GAN:

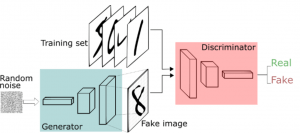

We first used the music21 Python package to extract the pitch and duration of each note in the MIDI files. We then turned these into an image for each file: each pitch corresponds to a row of pixels along the y axis, and each pixel along the x axis represents one 16th note of time, so a note's duration is its length in pixels.

These images were fed into the GAN, which consists of two neural networks: a discriminator, which learns to tell real images from the training set apart from generated ones (in effect, judging which musical elements are “desirable”), and a generator, which produces new images that try to fool the discriminator. The generator's output images were then converted back into note data describing pitches and durations, written to MIDI files, and finally converted to MP3 files.
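The encoding step above can be sketched as a round trip between note data and a binary "piano-roll" image. This is a minimal sketch under assumptions the post does not state: notes are hypothetical `(midi_pitch, start, duration)` tuples with times in quarter notes (music21's `quarterLength` units, where a 16th note is 0.25), the image has one row per MIDI pitch (128 rows), and each pixel is simply on (1) or off (0). With music21, such tuples could be gathered from `n.pitch.midi`, `n.offset` and `n.quarterLength` for each `Note` in `converter.parse(path).flatten().notes` (chords expose `.pitches` instead and would need separate handling). `notes_to_image` and `image_to_notes` are illustrative helpers, not functions from the project's code.

```python
import numpy as np

# Assumed layout (not taken from the project): one column per 16th note,
# one row per MIDI pitch number 0-127.
STEPS_PER_QUARTER = 4
N_PITCHES = 128


def notes_to_image(notes, n_steps):
    """Encode (pitch, start, duration) tuples as a binary piano-roll image.

    start and duration are in quarter notes; rows are pitches (y axis),
    columns are 16th-note time steps (x axis).
    """
    image = np.zeros((N_PITCHES, n_steps), dtype=np.uint8)
    for pitch, start, duration in notes:
        first = int(round(start * STEPS_PER_QUARTER))
        length = max(1, int(round(duration * STEPS_PER_QUARTER)))
        # numpy slicing silently clips notes that run past the last column
        image[pitch, first:first + length] = 1
    return image


def image_to_notes(image, threshold=0.5):
    """Decode an image (e.g. generator output in [0, 1]) back into notes.

    Pixels >= threshold count as "on"; each horizontal run of "on" pixels
    in a row becomes one note of that pitch.
    """
    active = image >= threshold
    notes = []
    for pitch in range(active.shape[0]):
        row = active[pitch]
        step = 0
        while step < len(row):
            if row[step]:
                run_start = step
                while step < len(row) and row[step]:
                    step += 1
                notes.append((pitch,
                              run_start / STEPS_PER_QUARTER,
                              (step - run_start) / STEPS_PER_QUARTER))
            else:
                step += 1
    # Order by onset time, then by pitch, like a score
    notes.sort(key=lambda note: (note[1], note[0]))
    return notes
```

For example, `notes_to_image([(60, 0.0, 1.0), (64, 1.0, 0.5), (67, 1.5, 0.25)], n_steps=16)` turns on 4 + 2 + 1 pixels, and `image_to_notes` recovers the same three tuples; the threshold lets the decoder accept a generator's continuous outputs as well as exact 0/1 images. One limitation of this simple run-based encoding is that two back-to-back notes of the same pitch merge into one longer note, which is why some piano-roll representations mark note onsets separately.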

Diagram of a GAN taken from https://towardsdatascience.com/generative-adversarial-networks-explained-34472718707a:
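The diagram shows the two networks trained against each other. The post does not say which loss the project used, so as a minimal sketch assuming the standard formulation from the original GAN paper (binary cross-entropy for the discriminator, plus the common "non-saturating" variant for the generator), the two objectives can be written over the discriminator's output probabilities. `discriminator_loss` and `generator_loss` are illustrative names, not functions from the project's code.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator probabilities for real training images (target 1)
    d_fake: discriminator probabilities for generated images (target 0)
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: small when D(G(z)) is close to 1,
    i.e. when the discriminator mistakes generated images for real ones."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)
```

For example, with a confident discriminator (`d_real=[0.9, 0.8]`, `d_fake=[0.1, 0.2]`) the discriminator loss is about 0.33 while the generator loss is about 1.96, so minimizing the generator loss pushes its images toward ones the discriminator scores as real. In a framework such as PyTorch, the same quantities are usually computed with `torch.nn.BCEWithLogitsLoss` on raw logits for numerical stability.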

Video Explanation of Taking a MIDI Output and Making It Into a Song: