The New Era of Aviation Safety with Cognitive Science

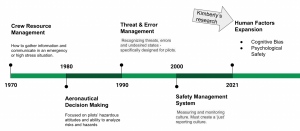

There is a gap in aviation safety systems. Despite technological advancements, ‘human error’ remains the most cited cause of aviation accidents. In many respects, pilots are trained as if they are machines – in an emergency, we are trained to react both mentally and physically without conscious processing. In emergencies or high-stress situations that do require conscious processing, we’ve adopted the Crew Resource Management (CRM) model to utilize all available information to maximize safety and efficiency. Along with CRM, pilots have Aeronautical Decision Making (ADM) and Threat and Error Management (TEM) in their Human Factors toolbox. Decade after decade, a new aviation safety process has emerged as an extension or augmentation of the previous model.

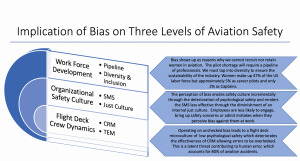

This linear growth is insufficient. These safety systems make assumptions about the flight deck and the organizational safety culture. How effective can Crew Resource Management be when a crew member does not feel psychologically safe to speak up? How effective is your Safety Management System when employees don’t trust that a just culture exists? The industry has made great strides in the human-automation interface, yet we have not examined how unconscious bias affects the sociotechnical system in the cockpit (crew working with each other and with the automation). My research will specifically address this gap and offer potential interventions to mitigate these biases and improve the safety culture both across the organization and within the flight deck.

Well-known safety systems, such as Crew Resource Management (CRM) and Safety Management Systems (SMS), were designed on the assumption that the flight deck and workplace culture are inclusive. That isn’t always the case.

The Safety Management System is aviation’s most holistic attempt at proactive safety: it requires the senior management team not only to create a culture where employees feel valued and respected but also to continuously monitor and improve that culture. Unfortunately, SMS doesn’t provide a toolkit for exactly how to do this.

To create a positive and just culture, leaders must build a high level of trust throughout the organization. Trust is rooted in psychological safety, meaning individuals feel comfortable being themselves without fear of negative consequences to their self-image or status. In psychologically safe teams, individuals feel accepted and respected. These teams are safer and make better decisions because team members feel comfortable speaking up, admitting mistakes, and raising safety concerns.

Nothing erodes psychological safety more than the perpetuation of bias. A Harvard Business Review study found that employees who perceived bias against them at work were likely to disengage from work, leaving them feeling angry and less proud of their organization. Additionally, they were three times more likely to quit their job within the year. The detriment to safety appears at the micro level, in the attitudes of individuals within the organization, and at the macro level, in low retention rates. The perception of bias erodes safety culture incrementally by degrading psychological safety, and it renders the SMS less effective by weakening the internal just culture.

Understanding how our biases impact others, and how we are impacted when we perceive bias, directly affects safety. Our ability to communicate, our likelihood of self-reporting, and our view of a just culture are all impacted by cognitive biases. Currently, an organization’s bias training (if any) stems from Human Resources and is framed either through the lens of social values (basic human decency – we ought to all be kinder to each other) or through an instrumental lens (diversity and inclusion increase creativity, efficiency, and profitability). While these perspectives are important, their direct relevance to safety – both within the flight deck and through the organization’s safety culture – has yet to be researched.

It is time for the industry to take an interdisciplinary approach toward a more comprehensive, proactive safety system with cognitive processing as its fulcrum. For cognitive science research to accurately inform aviation, someone within the industry needs to be in the ‘flight deck’ navigating the research. I hope to do just that.

RESEARCH GOALS

- Enhance flight deck safety (reduce pilot error as a cause of incidents/accidents).

- Improve organizational safety culture (strengthen the Safety Management System – SMS).

- Support the sustainability of the aviation and aerospace industry by investing in human capital through inclusive workforce development strategies that expand diversity throughout the industry.

To read more:

https://www.kimberly-perkins.com/